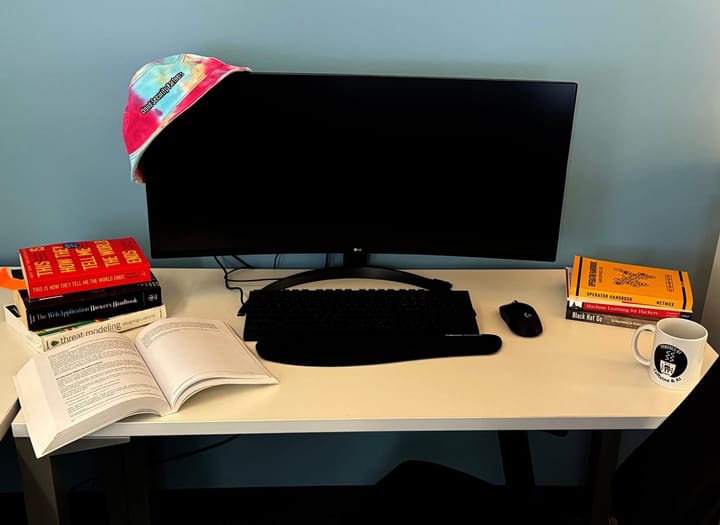

Breaking Into AppSec: Hack Your Way Into Cybersecurity!

Introduction

Breaking into Application Security can feel overwhelming at first. However, it's one of the most rewarding and dynamic fields in cybersecurity. You might be a newcomer to the industry, a software engineer or product manager looking to make a career pivot, or an existing AppSec engineer looking